Multicloud portability for Go Applications

18 Oct 2020Often teams want to deploy robust applications in multi-cloud and hybrid-cloud environments, and migrate their workloads between cloud providers or run on multiple cloud providers without significant changes to their code. To achieve this, they attempt to decouple their applications from provider-specific APIs by building generic interfaces to produce simpler and more portable code.

However the short-term pressure to ship features means teams often sacrifice longer-term efforts toward portability. So how can Go developers achive this portability?

Before we understand how we can do that, it’s important I believe, to explore the following

- why Go is “language of the cloud”?

- why should I care about multicloud portability?

- Is kubernetes == cloud portability?

Go is the language of the cloud

Over a million developers across the globe use Go on a daily basis. Companies like Google, Netflix, Crowdstrike and many more are depending on Go in production. Over the years, we’ve found that developers love Go for cloud development because of its efficiency, productivity, built-in concurrency, and low latency.

Go has played a significant role in the creation of cloud-related, cloud-enabling technologies like Docker, Kubernetes, Traefik, Cilium, LInkerd and many other. In fact, I think it’d be reasonable to say that the modern cloud is written in Go. A number of years ago an analyst wrote that Go is the language of cloud infrastructure, and I think that has been proven overwhelmingly true — most cloud infrastructure things have been written in Go.

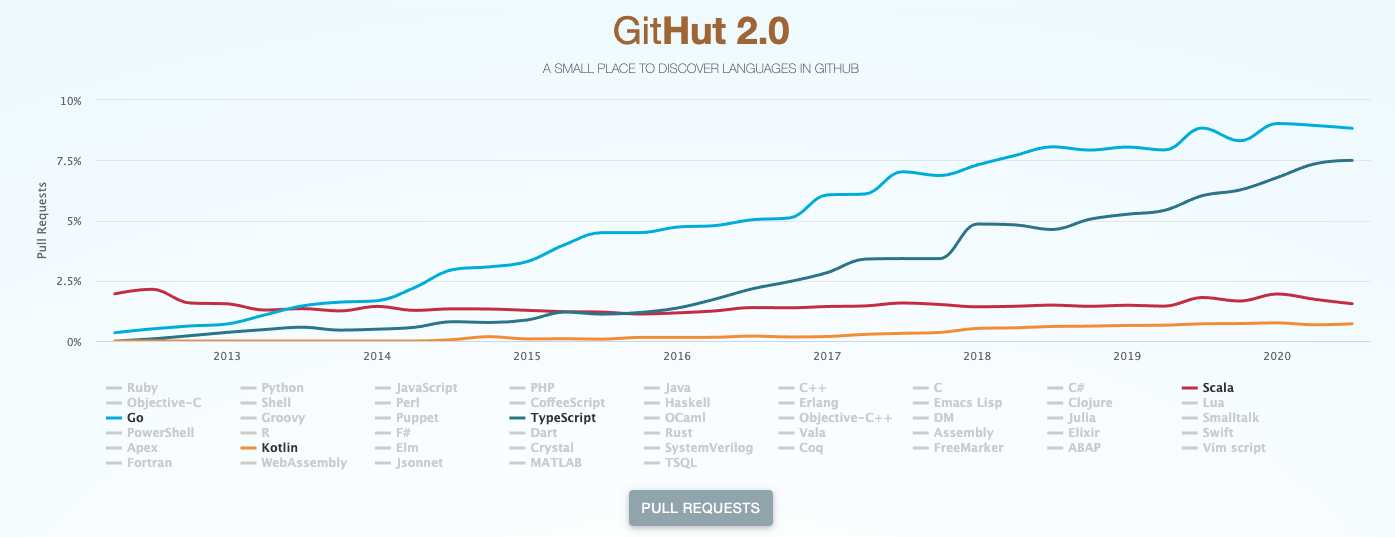

Go OSS Contributions 2013 - 2020

Go has been continuously growing in the past decade, especially among the infrastructure teams and in the cloud ecosystem.

And we’re increasingly seeing that Go is not only the language of cloud infrastructure but the entire language of the cloud. We’re seeing more significant adoption of Go applications on the cloud than other languages.

If you’e interested in exploring why Go is such a key language in cloud space I refer you to this awesome blog

Ok Go is awesome, but why should I care about cloud portability?

One common theme with teams at many organizations is the need for portability across cloud providers. These teams want to deploy robust applications in multi-cloud and hybrid-cloud environments, and migrate their workloads between cloud providers without significant changes to their code.

But why is portability important for cloud workloads? Most organizations adopt a multicloud strategy out of a desire to avoid vendor lock-in or to take advantage of best-of-breed solutions.

As technology becomes the center of competitiveness for nearly every enterprise today, companies have to think about being able to survive a widespread outage or a security vulnerability. Multicloud operations spread the risk of service disruption across a larger surface area. Think of it as an investment-diversification strategy.

While the cloud famously gave us hourly, and now even by-the-minute pricing, major deals in the cloud are negotiated, not bought at pricing off of the website. Simply put, you are in a much better position to negotiate if you can prove the ability to move workloads to another provider offering better terms and this is especially true if you are operating at scale.

Lastly, despite the trend towards so-called commoditization, there are differences between clouds that lend themselves to app placement for reasons other than diversification and negotiating leverage. Some clouds are in certain regions whereas others are not. Some have better workflows for certain applications. Just as modern development teams don’t want central IT to tell them to use any database as long as it is Oracle, app teams don’t want to deploy in an environment that is ill-suited to the task at hand.

Okay, so portability is important and desirable in theory. Now, let’s see how it works in reality. We start with a business problem and we aim to solve it by designing an application, developing it, test it and the whole shebang.

But in this cloud native era, we seldom build applications in isolation. Often times we pick a cloud provider to deploy and run our application. This gives us the leverage to support our application with some of the managed services provided by the cloud provider like blob storage, databases etc. This is great cause this reduces the code footprint, so less bugs and improves speed, efficiency and reliability of the entire application. So far so good.

For whatever reason let’s say that we have decided to migrate to a different cloud provider. If we have no pressure to build new features and have all the time that we need, we can make the code changes that are necessary by updating the code that’s interacting with cloud provider’s SDK and could complete the migration.

But we are all good developers some 1x, some 10x and some unicorns apparently! Our deep understanding of design patterns motivated us to come up with a better looking design. Instead of hard coding the cloud provider specific logic this time we actually introduced separation of concerns.

Our Application now talks to an interface layer that in turn talks to a cloud provider specific SDK. So next time when we want to migrate to a different cloud or run the app on a different cloud in parallel with the initial cloud we can just change the interface reducing the changes required to the actual application logic. This is much quicker than rewriting the whole application and understandably prone to less errors as well.

This is definitely an improvement than hardcoding cloud provider specific logic in the context of portability.

Instead of just choosing to migrate to a different cloud provider, what if we actually want to run the application on both clouds. Even though this is clearly possible thanks to our new application design which incorporates separation of concerns, it is not hard to see how it can get really tricky, complicated and time consuming to design such a provider agnostic interface that can liaise with multiple cloud providers.

Also, when we started this exercise we made two assumptions 1. There is no pressure to develop new features and 2. There is unlimited time. In reality though, the short-term pressure to ship features means teams often sacrifice longer-term efforts toward portability. As a result, most Go applications running in the cloud are tightly coupled to their initial cloud provider.

Isn’t Kubernetes == Portability?

Doesn’t Kubernetes already make this possible? I mean as long as we have Kubernetes deplpyments in a Kubernetes cluster it doesn’t really matter where that cluster is running. So can’t we just pick this Kubernetes deployment and run it in another cloud provider’s Kubernetes cluster?

I believe Kubernetes is a fantastic first step. But it’s also not the last. It is important to think about why that is.

In an idealized world you might say an application looks like this. You’ve written your Go Application. You put it inside a container. You’ve written some YAML files such that you can deploy that on Kubernetes. And then that Kubernetes cluster is running on some compute resource from some cloud provider. So the thinking here is what’s stopping me from taking my Kubernetes deployment and putting that on another cloud’s cluster, isn’t that portable cloud right there?

Well in reality many of the applications actually ending up looking like this. Teams are usually under intense pressure to be shipping features. At the end of the day, that really is valuable that the developers ship great futures to our users.

While these teams are fully aware of the importance of separation of concerns, loose coupling between a cloud provider and an application, in practice you have these fantastic managed services that these cloud vendors provide .. We are talking about databases, blob storage, runtime configurations these kinds of things that developers often end up just using these services..

After all they are fantastic reliable services and you wanna be shipping code. So rather than having nice loose coupling between layers, you have your applications having these hooks into your cloud provider. Inside your application code, you have some particular SDK invocations that may be calling out to the database, blob storage or whatever. As a result your nice Go application now ends up having knowledge of this underlying cloud provider which makes it hard to port to another cloud provider.

So running an app on multiple clouds often means:

- Rewriting it– which we all know is a tremendous risk. It’s also very expensive or

- Not doing it at all which means you have thrown the whole idea of having multi cloud portability out the window

So what can we do about this?

We have seen that decoupling applications from cloud providers is a good way to achieve portability. BTW, the concept of portability is neither new to Go nor to Go developers.

With Go standard library you can write an application, and just compile it for Mac, compile it for Linux or Windows or different architectures, and it just runs, and it runs without really any extra code. So, you know, the promise of true portability, the Go standard library delivered well on. What if we have a standard library for cloud that can help us achieve this portability across the clouds?

This is where Go CDK comes in. Go Cloud Development Kit (Go CDK) often referred to as “Go cloud” is an open source project building libraries and tools to improve the experience of developing for the cloud with Go. Go Cloud provides commonly used, vendor-neutral generic APIs that you can deploy across cloud providers. It makes it possible for teams to meet their feature development goals while also preserving the long-term flexibility for multi-cloud and hybrid-cloud architectures. So Go applications built with this CDK can be migrated to the cloud providers that best meet their needs.

What does that mean exactly? Remember the current state of the world for our Go apps now, where the application is coded to your particular cloud provider? The idea of Go cloud here is that same idea of separation of concerns.

You write your Go applications calling into this portable APIs for Go cloud. And then Go cloud has this cloud specific definitions for your cloud provider databases, blob storage etc. So when you want to switch out clouds or when you want to deploy to multiple clouds you have this very clear scene between your application logic and the setup code that is working with all these particular specific clouds.

What can I do with Go CDK?

Go cloud provides generic APIs for commonly used services through packages. Let’s take a look at these packages and understand what we can do we them.

blob:blob package provides us an abstraction over commonly used blob storage services across Clouds and even on-prem solutions. Using this blob package we can develop your application locally using files, then deploy it to multiple Cloud providers with just minimal initialization reconfiguration.docstore:docstore package provides an abstraction layer over common document stores like Google Cloud Firestore, Amazon DynamoDB, Azure Cosmos DB and MongoDB.mysql/postgresql:mysql, postgresql packages provides an abstraction layer over common relational stores like GCP CloudSQL, AWS RDS and Azure Database. If you are familiar with go’s standard sql library this might seem very similar. Infact it is. It only differs from the standard library’s sql package in that it automatically instruments the connection with OpenCensus metrics.pubsub:pubsub package provides an easy and portable way to interact with various publish/subscribe systems like AWS SQS, SNS, Google cloud pub/sub, Azure service bus, rabbit mq, NATS and Kafka!runtimevar:runtimevar package provides an easy and portable way to watch runtime configuration variables. It works with a local file. A blob bucket, GCP’s Runtime configurator, AWS Parameter Store, etcd and any http based configuration providers. My favorite feature from this package is the ability to watch for latest changes and fetch them periodically. Let’s say we save some application configuration in a s3 bucket. Using go cloud all we need to do is connect to the s3 bucket where our configuration resides using blob package and hook that with this runtimevar package. Go cloud will then periodically re-fetch the contents of the s3 bucket and update our app configuration. How cool is that !?secrets:Secrets package provides access to key management services in a portable way. It supports encryption and decryption of secrets for various services, including Cloud(GCP, AWS & Azure’s key management solutions) and on-prem solutions (like Hashicorp Vault)

Show me some code please!

Now that we appreciate the flexibility and broad support for services across clouds that comes with Go cloud, let’s see how it will help us achieve this portability by building simple a command line application that uploads files to blob storage on GCP, AWS, and Azure.

When we’re done, our command line application will work like this:

# uploads to GCS

./mcu gs://"$BUCKET_NAME" "$PATH_TO_FILE"

# uploads to S3

./mcu s3://"$BUCKET_NAME" "$PATH_TO_FILE"

# uploads to Azure

./mcu azblob://"$BUCKET_NAME" "$PATH_TO_FILE"

We invoke the binary by passing the blob storage URL as an argument and the uploader will understand which cloud provider we are using based on the URL argument and uploads the file to the correct destination.

Let’s get started on some Go code. We start with a skeleton for our program to read from command line arguments, to configure the bucket URL. We add small validation for the command line args and read those args into variables. Nothing fancy.

package main

import (

"log"

"os"

)

func main() {

if len(os.Args) != 3 {

log.Fatal("usage: mcu BUCKET_URL FILE_PATH")

}

bucketURL := os.Args[1]

file := os.Args[2]

_, _ = bucketURL, file

}

Now that we have a basic skeleton in place, let’s start filling in the pieces. To talk to the cloud provider blob storage service we import the blob package from Go cloud. Using the openBucket method that blob package exposes and passing the bucket URL to it we open a connection to the bucket. And finally, we write some error handling and add a defer statement to close the bucket connection when we exit the main function.

package main

import (

// previous imports omitted

"gocloud.dev/blob"

)

func main() {

// previous code emitted

// open a connection to the bucket

b, err := blob.OpenBucket(context.Background(), bucketURL)

if err != nil {

log.Fatalf("Failed to setup Bucket: %s", err)

}

defer b.Close()

}

)

This is all we need in the main function to connect to the bucket. However, as written, this function call will always fail.Go CDK does not link in any cloud-specific implementations of blob.OpenBucket unless we specifically depend on them. This ensures we’re only depending on the code we need. I will show how we can link in the cloud provider specific implementation later. With the setup done, we’re ready to use the bucket connection.

- Note, as a design principle of the Go CDK, blob.Bucket does not support creating a bucket and instead focuses solely on interacting with it. This separates the concerns of provisioning infrastructure and using infrastructure.

Now let’s finish the implementation by adding the missing code. We need to read our file into a slice of bytes before uploading it.

package main

import (

// previous imports omitted

"os"

"io/ioutil"

)

func main() {

// previous code omitted

// prepare the file for upload

data, err := ioutil.ReadFile(file)

if err!= nil {

log.Fatalf("Failed to read file: %s", err)

}

}

Now, we have data, our file in a slice of bytes. Let’s get to the fun part and write those bytes to the bucket! First, we create a writer based on the bucket connection. In addition to a context.Context type, the method takes the key under which the data will be stored and the mime type of the data. The call to NewWriter creates a blob.Writer, which implements io.Writer. With the writer, we call Write passing in the data. In response, we get the number of bytes written and any error that might have occurred. Finally, we close the writer with Close and check the error.

package main

// No new imports

func main() {

// previous code omitted

w,err := b.NewWriter(ctx, file, nil)

if err!= nil {

log.Fatalf("Failed to obtain writer: %s", err)

}

_, err = w.Write(data)

if err!= nil {

log.Fatalf("Failed to write to bucket: %s", err)

}

if err:= w.Close(); err!= nil {

log.Fatalf("Failed to close writer: %s", err)

}

}

If you are following this closely, you might have noticed that there is no cloud provider specific implementation code here. If we run this code it will fail because we haven’t told go cloud about which cloud provider’s blob service we want to use with this uploader app. Now let’s fix that. Let’s say we want to run this application in both AWS and Google Cloud first and we add support for azure later. So all we need to do is this:

package main

import (

// previous imports omitted

// import the blob packages we want to be able to open

_ "gocloud.dev/blob/gcsblob"

_ "gocloud.dev/blob/s3blob"

)

func main() {

// App code omitted

}

By just adding blank imports for aws s3 and gcp’s blob packages our uploader application will work with both aws s3 and gcp’s blob seamlessly. This concept of blank import almost seems like magic. To understand what’s happening under the hood let’s understand what blank imports in Go are how they are being used here to achieve this portability.

In Go blank identifier can be assigned or declared with any value of any type, with the value discarded harmlessly. It’s a bit like writing to the Unix /dev/null file. It represents a write-only value to be used as a place-holder where a variable is needed but the actual value is irrelevant. It is an error to import a package without using it. Unused imports bloat the program and slow compilation. However to silence complaints about the unused imports, we can use a blank identifier to refer to a symbol from the imported package. By convention, the global declarations to silence import errors should come right after the imports and be commented, both to make them easy to find and as a reminder to clean things up later. An unused import like fmt or io in this example should eventually be used or removed: blank assignments identify code as a work in progress.

package main

import (

"fmt"

"io"

"log"

"os"

)

var _ = fmt.Printf // WIP, Delete when done

var _ = io.Reader // WIP, Delete when done

func main() {

fd, err := ops.Open("test.go")

if err != nil {

log.Fatal(err)

}

// WIP, use fd

_ = fd

}

But sometimes it is useful to import a package only for its side effects, without any explicit use. When I say ‘import side effects’ I essentially am referring to code/features that are used statically. Meaning just the import of the package will cause some code to execute on app start putting my system in a state different than it would be, without having imported that package. This code can be an init() function which can do some setup work, or lay down some config files, modify resource on disc, etc. BTW, in Go any imported packages will have their init methods called prior to main being called. So Whatever is in the init() is a side effect. This form of import makes clear that the package is being imported for its side effects alone.

So in the case of our multi cloud uploader example when we import s3blob, the underscore import is used for the side-effect of registering the aws’s s3 driver as a blob driver in the init() function, without importing any other functions: Once it’s registered in this way, s3 bucket can be used with the go cloud’s blob portable type in our code without having to talk to s3 specific implementation directly. This separation of concerns is actually what makes the portability possible under the hood. Now that we have figured out the magic behind blank imports, adding support for Azure blobs is as simple as importing azure blob driver package, thanks to the magic that happens under the hood.

That’s it! we have a program that can seamlessly switch between multiple Cloud storage providers using just one code path. Without the Go CDK, we would have had to write a code path for Amazon’s Simple Storage Service (S3) and another code path for Google Cloud Storage (GCS). That would work, but it would be tedious. We would have to learn the semantics of uploading files to both blob storage services. Even worse, we would have two code paths that effectively do the same thing, but would have to be maintained separately. It would be much nicer if we could write the upload logic once and reuse it across providers. That’s exactly the kind of separation of concerns that the Go CDK makes possible. I hope this post demonstrates how having one type for multiple clouds is a huge win for simplicity and maintainability.

By writing an application using a portable library we retain the option of using infrastructure in whichever cloud that best fits our needs all without having to worry about a rewrite.

References: